OVERVIEW

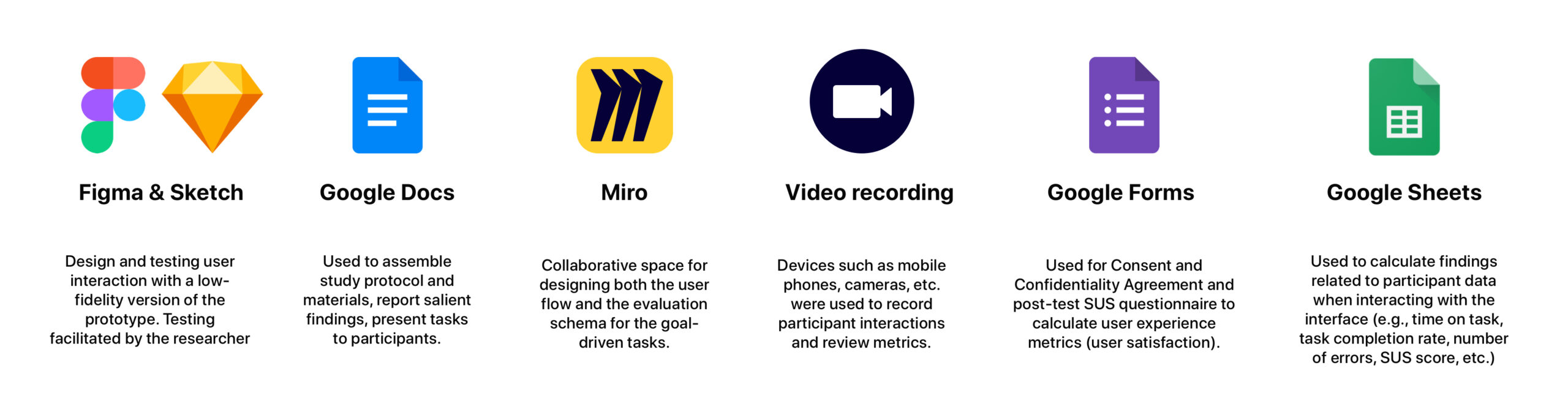

This project explored how to help adult learners of English as a Second / Foreign Language (ESL / EFL) improve their English-speaking skills through an interactive educational technology that also supports student motivation and engagement in remote learning.

For this investigation, the up-and-coming language-learning app "Speakly" was evaluated and redesigned to better support users' goals of improving their English-speaking fluency and ability.

For many ESL / EFL learners, the biggest barriers have been, and continue to be, a lack of speaking opportunities and limited access to constructive feedback from native speakers and/or trained linguistic experts. At the same time, there are approximately 1.5 billion English-language learners worldwide.

With increasing globalization, English has become the universal language of business, science, and media. Learning English is no longer just a “useful” skill; it has become a necessary one for competing and communicating in a global economic, digital, and social environment.

Based on preliminary and investigative research, this project explored how the Speakly iOS mobile app could be better designed, in terms of new features, design elements, usability, and user experience, to effectively support ESL / EFL learners in pursuit of their English-fluency goals.

My role: Principal researcher and designer; I led the project through all stages of the research and design cycle and oversaw the final delivery | Duration: 14 weeks | Tools: Miro, Figma, Sketch, InVision, Google Docs / Slides / Sheets, Google Meet

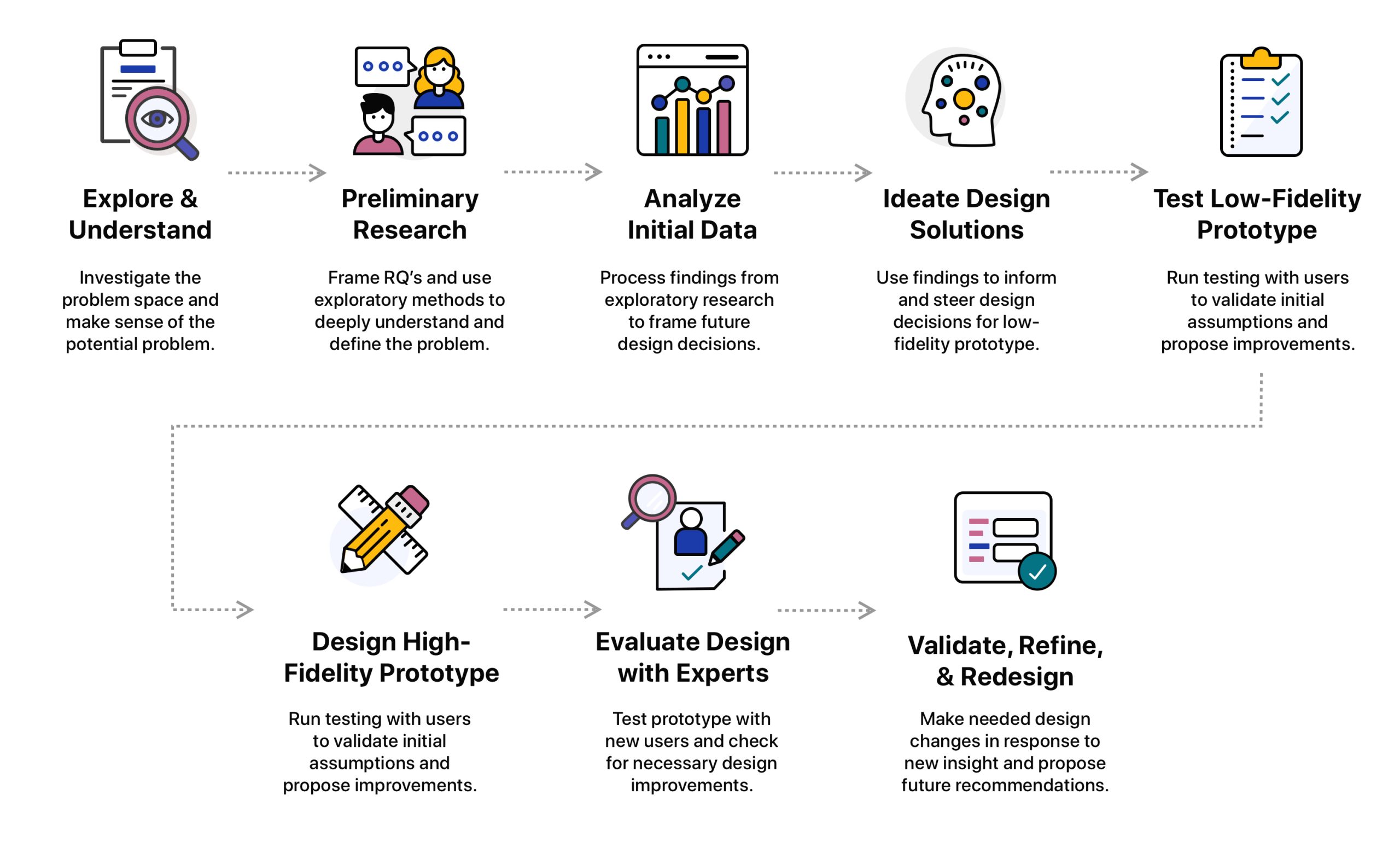

PROCESS

All aspects of the research and design process were conducted remotely from my location in the Netherlands, in collaboration with colleagues in Estonia and India as part of our Interaction Design Methods course. Dutch, Estonian, and Indian participants took part in data collection, both in their homes and remotely.

Research Questions

RQ1: How can the Speakly mobile app help people of all ages and demographic backgrounds who are learning English as a Second / Foreign Language (ESL / EFL) improve their speaking skills in a way that strengthens learner confidence and motivation?

RQ2: What new features and functionalities could improve the Speakly mobile app so that it helps students develop their English-speaking and communication skills through speaking-practice opportunities and constructive, personalized feedback?

RQ3: How can these new features and functionalities in Speakly be designed in alignment with the principles of Self-Determination Theory (SDT) (Deci and Ryan, 2013) to increase the potential for greater student engagement and motivation throughout the learning experience?

METHODOLOGY

(1) Preliminary research goals:

Explore user needs and deeply understand their values and expectations

This concerned the experiences, attitudes, beliefs, and values of people who have learned, or are still learning, English as a Second Language (ESL) and/or English as a Foreign Language (EFL). We also sought to understand the role e-learning has played, or continues to play, in how participants learn, study, and practice English.

Design a technological solution driven by holistic understanding

This solution should support English-language learners and positively stimulate their motivation and engagement throughout their educational journey. A sub-aim was for the technology to increase the effectiveness and impact of ESL tutors by empowering students and offering a more stimulating and enjoyable English-language learning experience.

(2) Methods and Data Collection:

Because we faced time, resource, and geographical constraints, we had to get creative: we used a mixed-method interview approach that combined the epistolary (asynchronous) interview method with in-person / remote interviews. This gave us a flexible and efficient means of data collection that accommodated both our needs as researchers and participants’ individual circumstances and availability.

For this process, I designed a semi-structured interview protocol to be followed as a script for each interview. Before the interview began, participants were asked to complete a Consent and Confidentiality Agreement, which gave an overview of the study and its expectations and reassured them about their privacy. After the interview, participants completed a short demographic survey and questionnaire; their answers provided further input for defining our personas.

The interviews were conducted asynchronously on text-based chat platforms (e.g., Slack, WhatsApp, iMessage, e-mail) and synchronously via Google Meet, FaceTime, Zoom, or in person. The interviewer and interviewee decided together which method (asynchronous and/or synchronous) was most appropriate and convenient.

Epistolary interviews are conducted “by letter.” They are convenient and flexible because respondents can reply meaningfully and thoughtfully at times that work best for them (Debenham, 2007). Additionally, “The method allows researchers to conduct several interviews at the same time, eliminates the need for transcription, and addresses internet reliability issues. Common approaches include email, custom apps, letters, and private message boards/instant messaging services” (McMaster University, 2021).

In-person and remote interviews are advantageous because they allow researchers to establish rapport and build a stronger human connection with participants (Design Kit, 2022; Nielsen Norman Group, 2018). Interviews help us better understand people’s goals, expectations, experiences, and values by answering the deeper why and how questions.

(3) Participant selection and recruitment

Participants were selected via convenience sampling on the basis of their existing social connections with the researchers. Although this method risks biasing the findings, the previously established rapport can encourage more genuine and authentic feedback. It was also appropriate given our budgetary, time, and resource constraints. Inclusion and exclusion criteria:

To qualify for this study, participants needed to...

- Be aged 18 or older (no upper age limit)

- Live anywhere in the world (not region-specific)

- Have access to a smartphone and a stable internet connection

- Have at least a basic grasp (CEFR A1 and above) of English as their second (third, fourth, etc.) language

- Have some experience learning English (ESL or EFL), whether through self-study or courses

- Participation in past language courses was not required

- Be available to participate in a remote or on-site interview (1 hour)

- (Or) be available to participate in an asynchronous (chat-based) interview (a conversation spanning 1-3 days)
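The criteria above combine several required conditions with one either/or condition (live or asynchronous availability). As a purely illustrative sketch, they can be expressed as a single screening check. The `Candidate` record and `is_eligible` helper are hypothetical and not part of the study's actual tooling; the CEFR ordering A1 < A2 < B1 < B2 < C1 < C2 is standard, and the `min_level` parameter is an assumption that lets the same check express the stricter A2 threshold used later for prototype testing.

```python
from dataclasses import dataclass

# CEFR proficiency levels, lowest to highest.
CEFR_LEVELS = ["A1", "A2", "B1", "B2", "C1", "C2"]


@dataclass
class Candidate:
    """Hypothetical screening record for one prospective participant."""
    age: int
    has_smartphone_and_internet: bool
    cefr_level: str            # one of CEFR_LEVELS, e.g. "B1"
    has_learned_english: bool  # via self-study or courses
    available_live: bool       # 1-hour remote or on-site interview
    available_async: bool      # chat-based interview over 1-3 days


def is_eligible(c: Candidate, min_level: str = "A1") -> bool:
    """Apply the inclusion criteria; raises ValueError for an unknown CEFR level.

    Location is not checked because the criteria are not region-specific.
    """
    meets_level = CEFR_LEVELS.index(c.cefr_level) >= CEFR_LEVELS.index(min_level)
    return (
        c.age >= 18
        and c.has_smartphone_and_internet
        and meets_level
        and c.has_learned_english
        # Either interview format is enough.
        and (c.available_live or c.available_async)
    )


if __name__ == "__main__":
    applicant = Candidate(age=34, has_smartphone_and_internet=True, cefr_level="A1",
                          has_learned_english=True, available_live=False,
                          available_async=True)
    print(is_eligible(applicant))                  # True
    print(is_eligible(applicant, min_level="A2"))  # False
```

Keeping the availability rule as an explicit `or` mirrors the "(Or)" in the list: a candidate needs only one of the two interview formats.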

RESULTS AND ANALYSIS

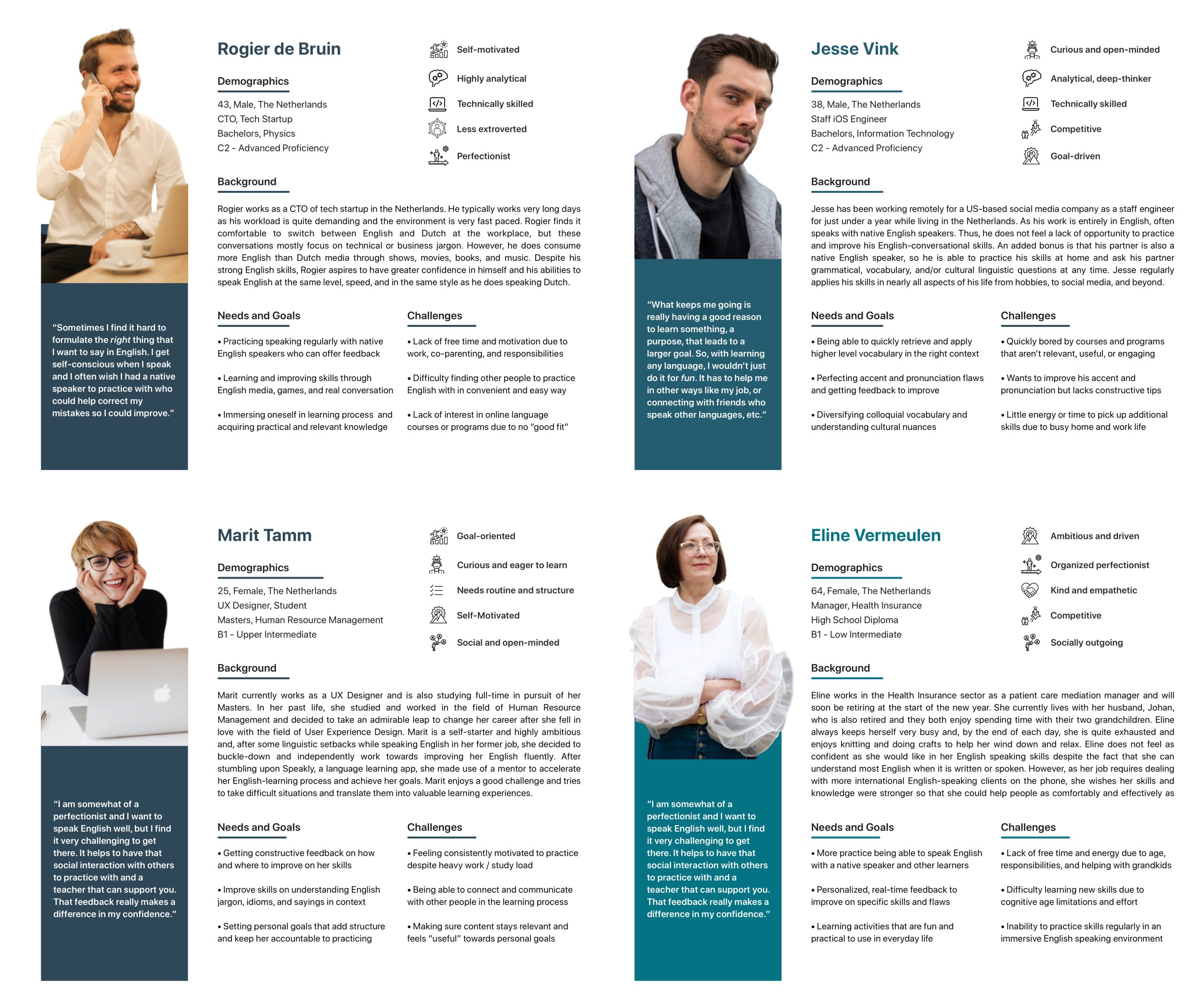

In total, we conducted five semi-structured interviews in English, Dutch, and Estonian with individuals (3 Dutch, 2 Estonian) from diverse backgrounds varying in age, nationality, geographic location, educational background, English proficiency level, and technical skill level. I conducted four of the five interviews.

The purpose was to gain a comprehensive understanding of user needs and to empathize with a wide range of perspectives and experiences. The interview data was transcribed and coded using inductive thematic analysis, and the results were aggregated and synthesized in Miro.

User Personas Generated from User Interview Data

Results from Interview Data Organized Using Inductive Thematic Analysis

IMPLICATIONS FOR DESIGN

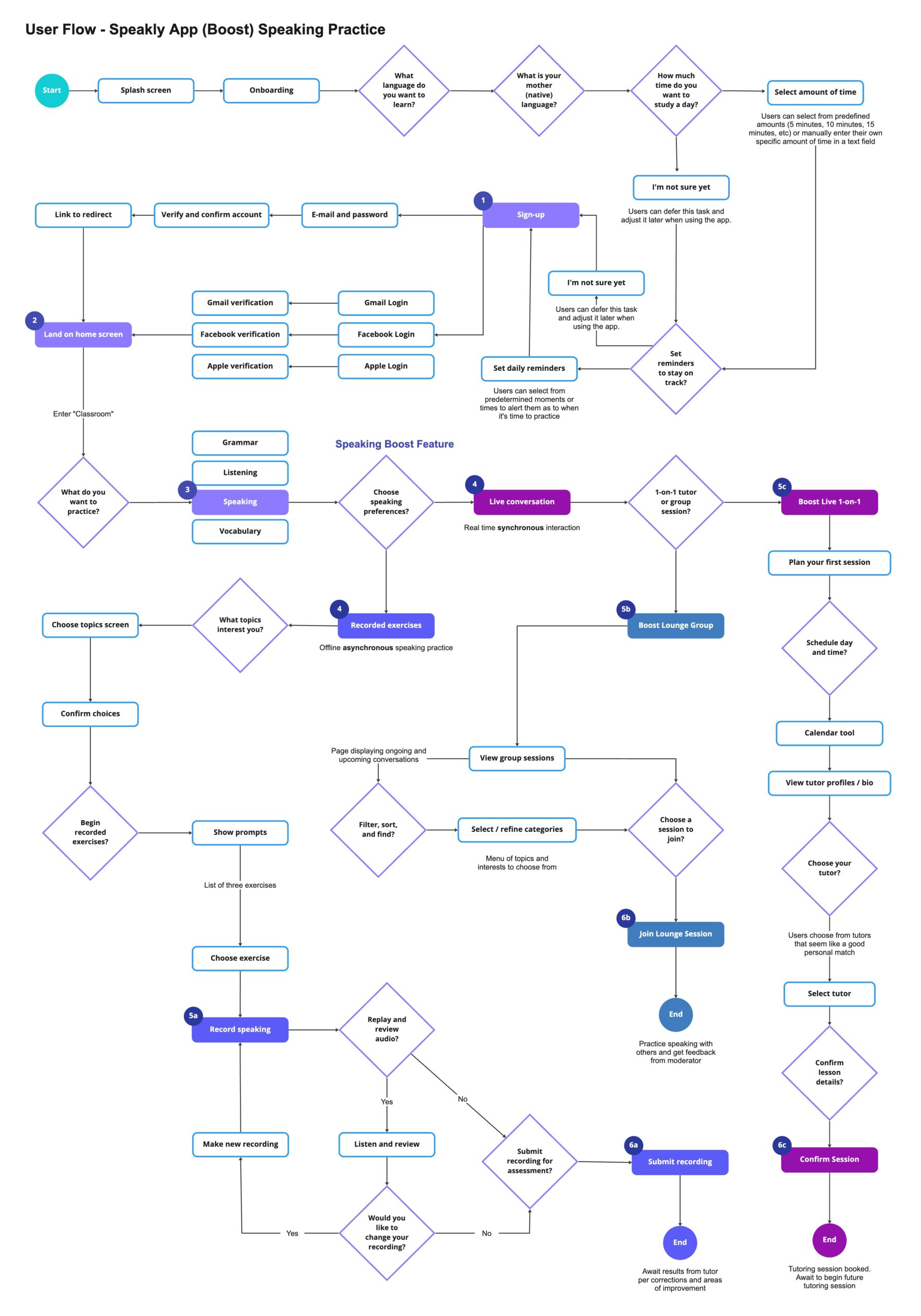

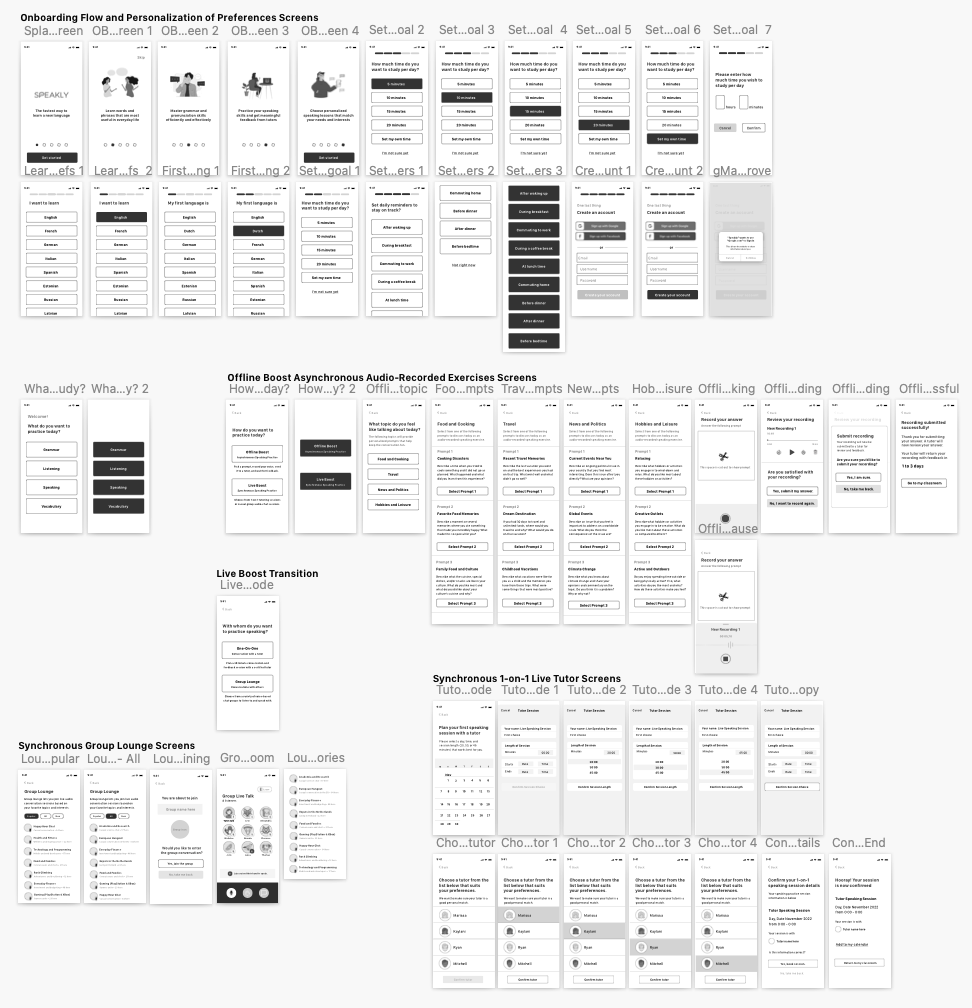

The proposed solution, called "Speaking Boost," would be a new feature integrated into the Speakly app for iOS. It would offer a more flexible and personalized approach to English-speaking practice, in both asynchronous and synchronous modes.

The problem is that the Speakly app, as it stands, does not allow users to set personal goals, gives them no autonomy over what they learn or practice (e.g., topics and themes are chosen by the system), and offers no opportunity to practice speaking with other learners (i.e., group sessions).

Speakly does offer paid one-on-one tutoring sessions, but the educator chooses the topics, so learners cannot decide beforehand what the conversation will focus on. Speakly focuses primarily on grammar and vocabulary-building exercises and lacks sufficient practical speaking opportunities.

The purpose of this feature is to help English learners become more fluent and confident speakers through both synchronous and asynchronous modes of practice. Learners can choose from...

- “Offline” practice: audio-recorded exercises that are sent to an English tutor, evaluated for errors, and returned to the learner with constructive feedback;

- “Live” one-on-one tutoring sessions that are scheduled in advance, provide real-time practice and feedback from a qualified English tutor, and are recorded to allow later reflection; and/or

- Group “Lounge sessions,” in which moderators facilitate discussions on diverse topics, allowing both active and passive conversational engagement, social connection, and shared learning opportunities.

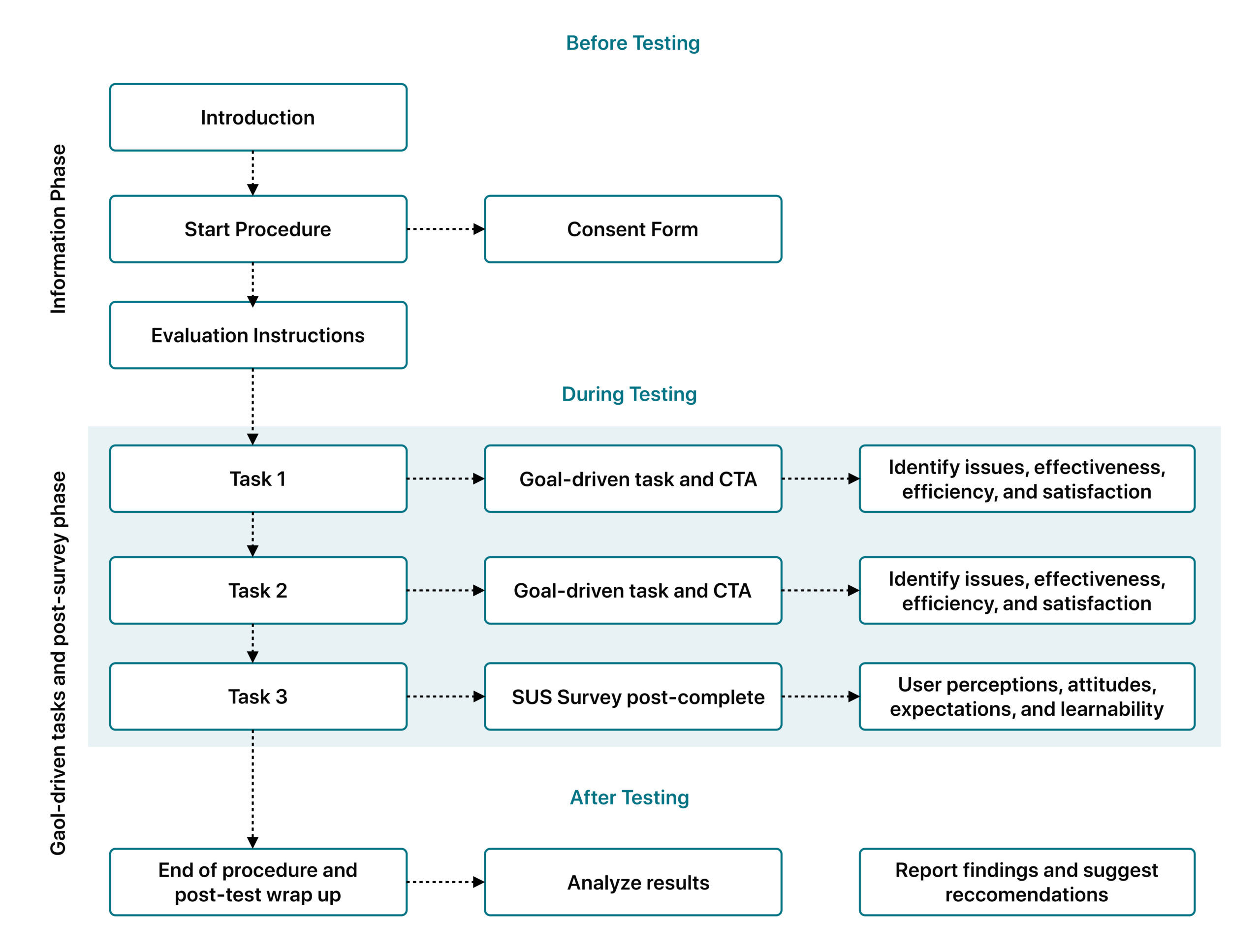

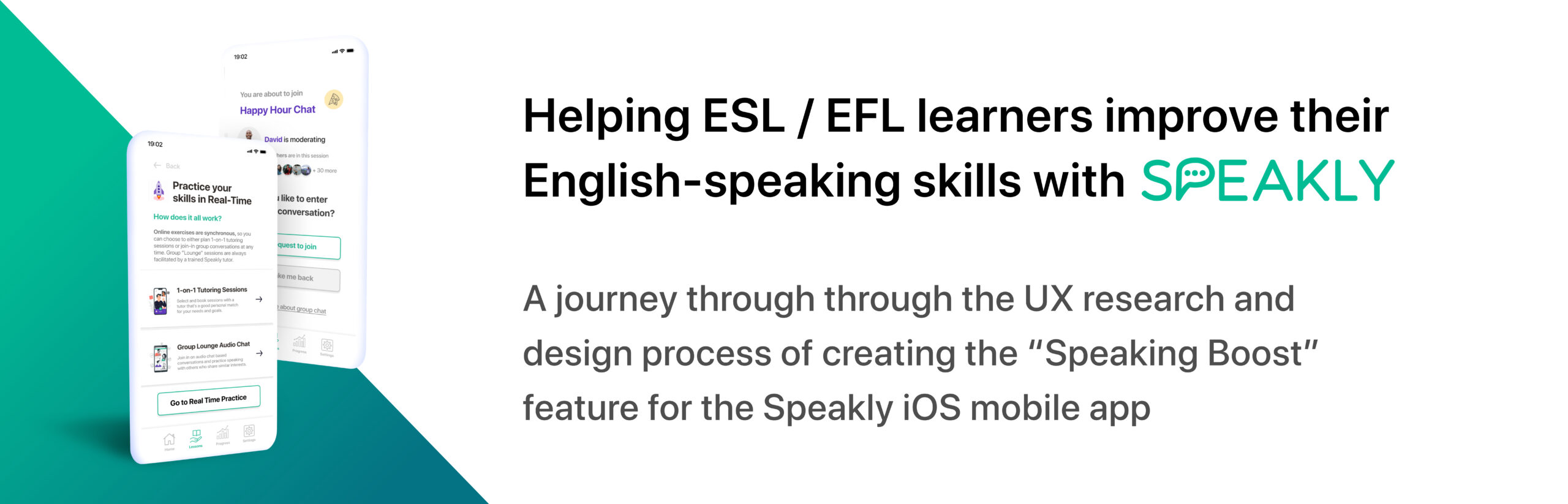

TESTING THE LOW-FIDELITY PROTOTYPE

1. Participant selection:

Participants were chosen via convenience sampling based on prior social and/or familial connections. Although this introduces sampling bias, the method was necessary because of budget and time constraints: we could not offer participants monetary compensation, so recruiting a truly “random” sample was not feasible.

Nevertheless, our sample included individuals (18 and older) who have learned, or are currently learning, English as a Second Language (ESL) or Foreign Language (EFL), from diverse demographic, cultural, regional, professional, educational, and personal backgrounds. Inclusion criteria required at least an A2 level (low intermediate) of English literacy, with higher levels up to C1 (professional proficiency) and C2 (fluent) also eligible.

2. Goal-driven tasks:

Participants were asked to complete two goal-driven tasks evaluating the onboarding experience. According to Toptal (2022), an effective onboarding experience is critical for helping users understand what a product offers and whether it aligns with their individual needs and expectations.

Toptal adds that “nearly one in four users will abandon a mobile app after using it just once… It is highly likely that the users that ditched thought they would get something valuable from the app and weren’t immediately convinced.”

3. Materials and Tools:

4. Evaluation schema:

5. User flow diagram: participants using the “Speaking Boost” feature in the Speakly mobile app

6. Low-Fidelity Prototype in Sketch Workspace

7. Results from user testing sessions with low fidelity prototype

General Summary of Feedback and Results

- Participants moved through the tasks smoothly, without friction or major barriers

- Onboarding screens and functionality were clear and effective

- Participants were unsure how much study time to set aside and commit to

- Participants wanted reminders, but wished the reminders were "smarter"

- Participants strongly preferred the asynchronous practice mode, in which they could record themselves

- Task 1: completion time of 3 min 4 s, 0 errors on average, 100% completion rate

- Task 2: completion time of 4 min 11 s, 0 errors on average, 100% completion rate

- SUS score: 80.62 (Good) in terms of user experience and satisfaction
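SUS scores such as the one above come from the standard ten-item System Usability Scale questionnaire. The sketch below shows the conventional scoring, assuming each participant rated all ten items on a 1-5 scale; the function names are hypothetical, and the ratings in the usage example are made up, since the study's raw responses are not reported here. A study-level figure is typically the mean of the per-participant scores.

```python
from statistics import mean


def sus_score(responses: list[int]) -> float:
    """Return one participant's SUS score (0-100) from ten 1-5 ratings."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS ratings must be between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd-numbered items are positively worded: contribution = rating - 1.
            total += rating - 1
        else:
            # Even-numbered items are negatively worded: contribution = 5 - rating.
            total += 5 - rating
    # Each item contributes 0-4 points, so the 0-40 total is scaled to 0-100.
    return total * 2.5


def study_sus(all_responses: list[list[int]]) -> float:
    """Mean SUS score across participants (hypothetical helper)."""
    return mean(sus_score(r) for r in all_responses)


if __name__ == "__main__":
    # Made-up ratings for illustration only; not the study's data.
    example = [
        [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
        [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
    ]
    print(study_sus(example))  # 87.5
```

For example, `sus_score([4, 2] * 5)` gives 75.0: each of the ten items contributes 3 points (30 in total), which is then multiplied by 2.5.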

Reflection and Understanding Feedback

- Asynchronous recording practice was very useful, but participants needed to be better informed about how this new feature worked and what to expect. This could have been achieved with a demo video, animations, or instructions. Having to speak right away, without understanding what would happen next, felt somewhat intimidating and reduced participants’ sense of control. More support for user self-direction was needed.

- Participants wanted clearer information about how submitting audio for review and feedback works. They were unsure how long it would take for the tutor to "grade" their recording. Submission status and expected turnaround time needed to be communicated more clearly, for example through more in-depth feedback data in charts or dashboards.

- The structure of learning plans, including how often learners would need to study to reach specific goals, needed to be communicated better. Participants wanted a more customizable, personalized study framework that fits their goals, but were less interested in reminders. Users should feel a sense of achievement through their interactions with, experience of, and understanding of the app across all UX touchpoints.

Translating User Feedback to Design Changes and Decisions

- Demonstration (video, animation, or infographic) for the new "Speaking Boost" feature: Before new users start asynchronous speaking-practice exercises for the first time, they are shown an overlay where they can choose to watch a short, informative demonstration of the feature.

- Add a screen explaining feedback and a “Feedback Dashboard” for the “Speaking Boost” feature: It is important to communicate how the asynchronous audio exercises help learners improve their English-speaking skills. An FAQ-style, step-by-step infographic can help users understand what “feedback” means, clearly explaining how it is broken down by assessment category (e.g., grammar, vocabulary, and pronunciation errors).

DESIGNING THE HIGH FIDELITY PROTOTYPE

Using feedback received from participants during the low-fidelity testing sessions, we refined the low-fidelity prototype to improve certain aspects of the design. These refinements included demonstrating more clearly how features work so that they match user expectations; a more personalized learning experience that suits learners’ needs and interests; and the ability to get useful, objective feedback from tutors on one’s speaking performance. The high-fidelity prototype was designed in Figma.

Demonstration of interactive prototype in Figma

8. Evaluation Process

An assessment- and validation-based approach was carried out via expert evaluation, following the guidelines and procedures outlined by Goodman et al. (2012), that is, “to test features during implementation” and “to certify that features meet certain standards and benchmarks in the evaluative process” (p. 274).

Four expert participants took part in total, allowing us to capture a wide range of insights and perspectives on our high-fidelity prototype in terms of features, functionality, technical limitations, and market viability.

We followed the “Creating Tasks” framework outlined by Goodman et al. (2012, p. 283) when designing three tasks that our experts completed to navigate through and evaluate the high-fidelity prototype. The tasks were reasonable, defined in terms of end goals, specific, doable, in a realistic sequence, domain-neutral, and of reasonable length.

The tasks were designed to emulate a “real-world” user experience in which users interact with the product in pursuit of their end goal(s) (i.e., improving their English-speaking skills). At this stage of the design process, however, we were mainly concerned with collecting qualitative and experiential data rather than the pure usability statistics, such as time-on-task and task completion, that we prioritized in our low-fidelity evaluation. Accordingly, the evaluation used the Concurrent Think-Aloud (CTA) method, goal-driven tasks, and a short, open-ended post-test questionnaire.

Goal-Driven Tasks

FEEDBACK FROM EXPERTS

From the goal-driven tasks and the post-test semi-structured interview, the following insights were gathered.

Flow 1: Onboarding and create user account

- No major issues aside from being unable to swipe natively through onboarding screens (push)

- Better support for native UI interactions is needed: allow thumb swipes rather than arrow buttons only

- Login using Google credentials was preferred and positively assessed

- Overall assessment: Easy, straightforward, and quick while being informative

Flow 2: Offline recorded asynchronous exercises

- Minor confusion but no major issues; the tab bar menu did not indicate the participant's location on the home screen

- "Learn more" option was useful and helped "take away all the fears" as to what to expect

- Wished that the speaking exercises provided time / duration information (How long must the recording be?)

- Most interest was in speaking about "light" topics rather than serious or political ones

- The fact that a "real" tutor would review the recording was positive

- Overall assessment: Experts were positive and receptive to the usefulness of this feature

Flow 3: 1-on-1 Tutoring sessions with a live tutor

- Several UX issues related to clarity and effectiveness should be addressed

- Using a modal view rather than a continuous flow to a new screen would be less disruptive

- Some parts of the design were inconsistent with other screens

- Uncertainty as to why certain tutors were showcased versus others

- Booking feature was useful but should have allowed for multiple booking options based on availability

- Overall assessment: This feature needed to be redesigned to meet the UX standards of other features

Flow 4: Group Chat casual Lounge sessions

- No major issues and was viewed positively by both experts in terms of interest and utility

- Design was similar and consistent with other products / platforms like Google Meet

- The feature was comparable to Twitter Spaces, and this familiarity made experts feel more secure

- Experts were very enthusiastic that a tutor moderated the session

- Overall assessment: Experts were positive and did not encounter any UX issues

KEY INSIGHTS FOR DESIGN & FUNCTIONALITY

Being able to determine the quality of the tutors was important (1-on-1 Tutoring Sessions)

"I may not be learning a language at the moment, but I do like the idea that I am able to connect with tutors. But, it really depends on the quality and the pricing of the tutors, how easy it is to cancel if I don’t like a tutor. I have all these worries and I wonder if a tutor is not good quality or flakey, I wonder what rights I may have. Am I able to opt out whenever I want?" – Expert 1

- Finding a "good" and "reliable" experience from a customer perspective was a high priority

- Users want a sense of what to expect in terms of tutor qualifications, education, and professional experience

- Users valued third-party validation (social proof) in the form of reviews from past students

Strong support for autonomous learning through asynchronous practice (Offline Speaking Exercises)

“I think the app was simple, but in a very good way. You don’t have to do too much to be able to use it. It’s nice to have an asynchronous option. That’s really important because of time zones, busy lives, it’s nice to say ‘Tomorrow at 11 am I am going to practice,’ so it’s continuity. You can be really serious about something and say that every morning I am gonna speak English for half an hour and improve. It’s your own schedule.” – Expert 1

- Low barrier to learning the app and getting started towards one's goals

- Meets contextual needs of diverse learners and allows for flexibility and convenience of use

- Product is easily scalable as tutors can be sourced from anywhere in the world

- Allows for a personalized experience where students can find their best tutor match

Group learning environments should be accessible and well-regulated (Group Lounge Sessions)

“I do really like this idea of this speaking and listening focused app with others because that would allow you to pop into an actual conversation. I love that you have a moderator, because then it would make sure that everyone can say something and could correct your mistakes or help you say something a different way. I would love to see the lounges. It could be very interesting.” – Expert 2

- It should be clear how many students (the participant limit) can join each group conversation

- Clearly communicate what level of fluency is required to join a specific group (e.g., A0, A1, etc.)

- Allow students to participate equitably based on their experience levels and avoid overwhelming them

- Keep fluency levels consistent within each group so conversations stay cohesive and students feel less intimidated

User Interface (UI) and related functionalities (UX) allowed for a positive and enjoyable experience

“I liked that there was not too much going on or too many buttons that were distracting. If I compare this to Duolingo, it had badges, it had shiny things, it had paths, it forces me to choose to go left or right, but I don’t want to choose, I just want to start practicing learning a language. I like that this was very straightforward and asked: ‘Do you want to practice offline?’ and here’s a nice little list of cool options and the question of free-form chatting. I liked how it walked me through what I had to do, it was very clear the whole time, I never actually got confused while using the app." – Expert 2

- Overall, the app was assessed as generally well designed and reliably functional

- Allowed users to quickly work towards their goals with a short learning curve and little upfront effort

- Users felt informed about how the app worked at every stage of the experience

- Users' general sense of trust, freedom, and control was high, which increased perceived usefulness

CONCLUSION

In researching and designing the Speaking Boost feature for the Speakly app, we reached our goal of more effectively enhancing the language-speaking experience for English as a Second Language (ESL) and English as a Foreign Language (EFL) learners. This was demonstrated through in-depth qualitative analysis, usability testing, research-informed design iteration, and expert review.

Revisiting the Research Questions

1. When it comes to motivation and engagement amongst adult ESL and EFL learners, confidence, convenience, and applied speaking practice play a major role in personal success.

A lack of consistent, applied speaking practice with native speakers leaves learners demotivated, asking "What's the point of learning if I can't use it?" This creates a negative feedback loop: too little speaking practice leaves people less confident in their skills and anxious about making mistakes, which becomes a mental barrier to practicing more often, even though more frequent practice would improve both skills and confidence.

At the same time, adult learners want a way of learning that fits within their personal contexts and busy everyday lives. The traditional classroom setting is not feasible for most people who are working, studying, and/or raising a family. Adult learners also want programs that focus on topics that interest them (e.g., hobbies and leisure, professional skills and work) rather than seemingly random topics imposed on them by a curriculum.

2. Based on the results, despite the name "Speakly," the service falls short of providing applied speaking practice that emulates real-world conversational experiences.

Our proposed "Speaking Boost" feature offers multi-modal remote speaking opportunities that take place either in real time or offline through recorded exercises. This supports personalization by fitting the feature to users' contextual needs. Based on the results, participants valued both conversing with a tutor in real time and practicing asynchronously through recorded exercises.

3. With regard to Self-Determination Theory (Deci and Ryan, 2013), student motivation and engagement can be increased through the "Speaking Boost" feature, as it integrates and supports (1) learner autonomy; (2) competence (self-efficacy); and (3) relatedness.

ESL and EFL learners can exercise autonomy through independent, self-directed learning in a remote, self-paced, and highly personalized learning environment, which gives them greater control over how they learn. By increasing speaking practice opportunities, the feature supports feelings of competence and confidence in one's ability to achieve speaking goals. Lastly, learners can connect socially with a tutor one-on-one as well as with fellow learners like them, which generates a sense of connection with others. Combined, these factors create a positive and motivating language-learning environment.

REFERENCES

1. Debenham, M. (2007). Epistolary interviews on-line: A novel addition to the researcher’s palette. JISC TechDis. https://www.debenham.org.uk/personal/Epistolary_Interviews_On-line.pdf

2. Deci, E. L., & Ryan, R. M. (2013). Intrinsic motivation and self-determination in human behavior. Springer Science & Business Media.

3. Design Kit. (2022). Interview methods. https://www.designkit.org/methods/2

4. Goodman, E., Kuniavsky, M., & Moed, A. (2012). Observing the user experience: A practitioner's guide to user research. Elsevier.

5. McMaster University. (2021, April). Qualitative Research at a Distance. Spark - McMaster University. Retrieved September 26, 2022, from https://spark.mcmaster.ca/documents/pandemic-friendly-qualitative-methods.pdf

6. Nielsen Norman Group. (2018). User interviews: How, when, and why to conduct them. https://www.nngroup.com/articles/user-interviews/
